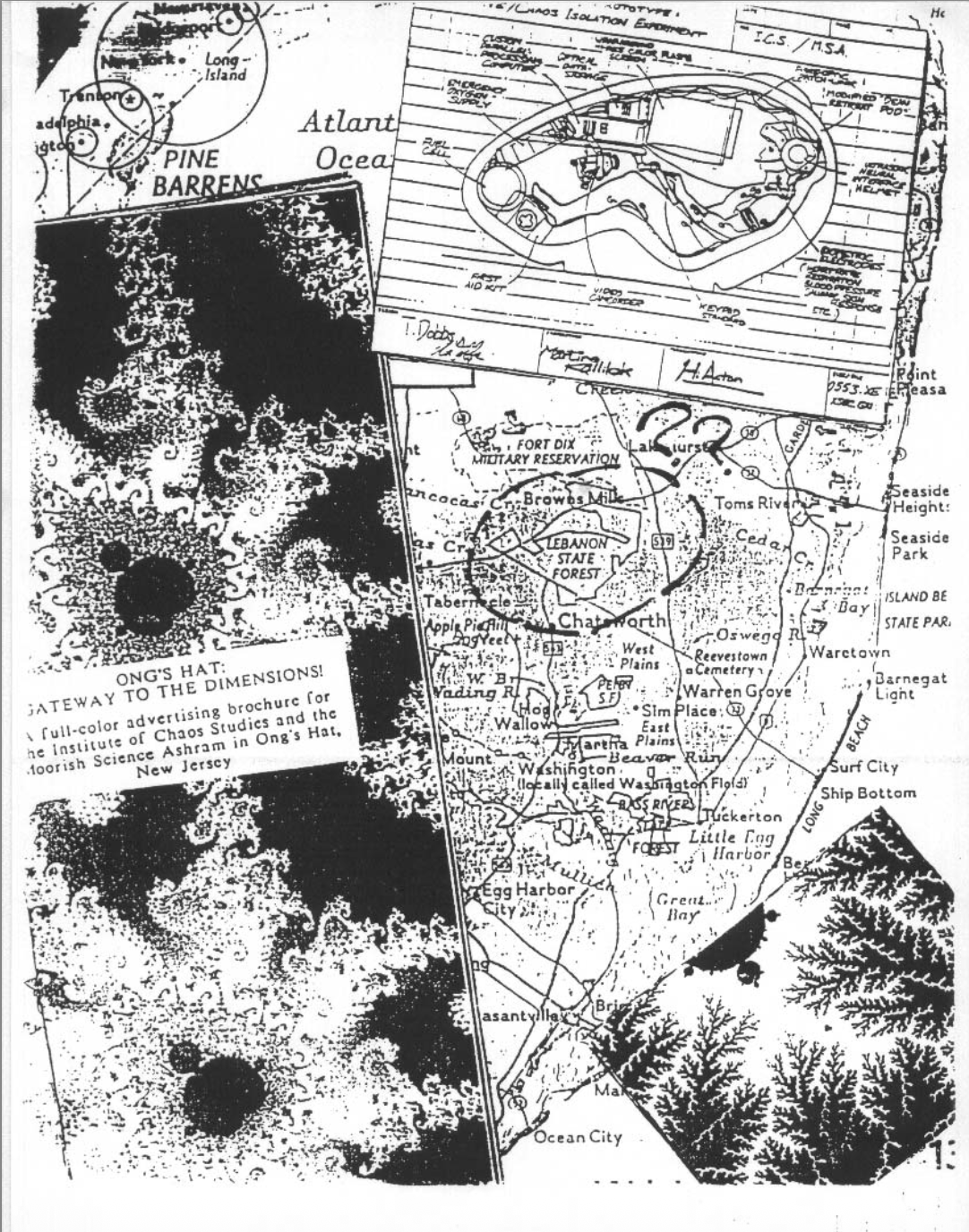

“Rationed Water, 2013” by Jogging (link)

Information takes the shape of its container. This has been a refrain in much of my work lately, inspired by the stream of research on water quality data curation that I started back in 2022. I’m now pleased to report that the first journal article from this line of thinking is finally out in print. The article in question, “Coastal water information orders: Reverse-engineering New York City’s data dashboards,” is now available in Social Studies of Science.

I’ve been looking at source code and other computational texts to understand the production and circulation of knowledge for years now, but in the past I largely focused on software and file forensics. This time, I’ve turned my attention towards the structure of scientific datasets. I was inspired partly by my teaching. Since joining San José State University in 2022, I’ve been teaching a master’s-level course on database design that got me thinking about the ways that data structures influence what counts as knowledge. In other words, I’ve been interested in how the contours of data affect the contours of everyday life.

My role at SJSU shaped the work in other ways too. My coauthor, Rachel Paprocki, was a grad student in our program when we wrote the paper together. We met when I was looking to hire a research assistant, but our collaboration continued beyond her initial appointment.

Now that I’m immersed in the ocean of data being generated by coastal researchers, I have several other related projects underway as well. I presented a historical paper about how water quality data curation has changed since the 1990s at RESAW in Germany over the summer, and I hope to publish an expanded version of that paper soon. More recently, I organized a panel with other water data researchers at the 4S conference in Seattle. A few weeks from now, I’ll be at the ASIS&T conference in Washington, D.C. presenting a paper on the interaction between water quality data and geographic information systems.

I have always considered myself a scholar of digital objects’ value as evidence, construed in the broadest possible sense. The digital objects associated with water quality have proven exceptionally generative. Where else can we see so clearly the propensity for digital systems to discretize and stabilize inherently unwieldy and changing phenomena? Bodies of water are constantly moving and changing, such that any data collected about their composition is always already outdated. I suspect most scholars’ research outputs are similar: constantly evolving, but captured from time to time in static representations like an article. You can read the latest such snapshot from me now in Social Studies of Science.